Comment October 7, 2019

Harnessing the Power of our Data to Protect our Fresh Water

By Lubna Seal, Junior Data Scientist

The world of environmental protection is evolving and borrowing from different sectors to find smart and efficient ways to monitor, understand and protect our ecosystems.

That’s where IISD Experimental Lakes Area comes in.

Our unparalleled 50+ year dataset on the health of five of our lakes—that tracks everything from the temperature and chemistry of the water to how many zooplankton and fish live in a lake—is being overhauled using artificial intelligence so it can open up a whole new understanding of our boreal lakes and where they are headed.

We sat down with Lubna Seal, our junior data scientist, who has been working on our unique environmental dataset to get it ready for the next stage in freshwater protection.

She explained to us why big data is the key to mitigating the impacts of climate change, and where we need to go from here.

How did you first get interested in big data?

I come from a place on the eastern coast of Bangladesh where major land areas have been inundated due to sea level rise from climate change, making it one of the communities on Earth most vulnerable to the impacts of climate change. When I came to Canada, I obtained a Master’s degree in climate change from the University of Waterloo, and I have worked mostly in risk and adaptation research for over half a decade now.

All of this experience has made me appreciate that, while the cause of climate change is singular (namely, an increase in greenhouse gas emissions), the impacts are so diverse and multifaceted. That’s where big data comes in for me.

Big data can shine a light on uncertainty around climate change scenarios and organize insightful information to allow decision-makers to implement policies that most effectively protect communities from climate change.

The revolution of big data can empower the communities and organizations that need the information because lots of this big data crosses political and social boundaries—especially data that’s reproducible and open.

How would you define "big data"? And why do you think it can benefit environmental research?

The definitions of big data vary from industry to industry, but most of them revolve around the idea of the 4Vs (Volume, Velocity, Variety and Veracity). That is to say, if the size of any of these Vs goes beyond the capacity of a conventional desktop computer, it can be considered big data.

Even so, the definition that I like the most is that “big data refers to large, complex, potentially linkable data from diverse sources.”

The scope and scale of environmental research continue to grow, resulting in an increasing amount of data. Every piece of research—from the exploration of ecosystem-level changes in the time of human intervention to the impact of climate change on human health—is somehow interlinked.

With high-performance computing, big data can find meaningful correlations within and across data sets and help build a holistic view of complex ecological problems. In a complex world, we need deeper insights to help us make the best possible decisions.

How do you think IISD-ELA and its unique environmental dataset can make an impact in this sphere?

For me, IISD-ELA offers a unique suite of experiments that respond to current and emerging threats to fresh water, and effectively demonstrate the cause and effect of ecosystem-level changes.

We now live at a time when environmental policy-making tends to require near-term (daily to decadal) data, and IISD-ELA can provide this data on a seasonal or decadal timescale. This is critical for policy-makers to understand current trends and then make informed decisions. It can also provide long-term data on a multi-decadal timescale, necessary for prediction-based modelling whereby we use existing data and statistics to predict new outcomes. As the climate changes, we are already seeing the winter season shorten and lakes become darker coloured. We are using data science tools to explore this big data set to find more changes caused by climate and weather.

IISD-ELA has been monitoring and tracking data from five of its lakes for its Long-Term Ecological Research (LTER) dataset for over 50 years, and due to the complexity of that dataset, I would say it has entered the world of big data.

Although the dataset is relatively small in volume, the extensive heterogeneity in its parameters reveals the complex relationships between multiple stressors and the health of fresh water. By putting the data together in context, we can develop greater insights and help make better decisions about the future.

What do you mean by data heterogeneity, and how can that be useful?

What I mean is that our research is not laboratory research where one can manipulate one condition and control the others. In a real-world ecosystem, like the ones we study, multiple stressors co-exist, so it is imperative to identify the multi-dimensional impacts of a single stressor or of multiple stressors.

For example, I was reading an interesting article on the BBC website, where David Schindler, one of our original lead researchers, was talking about the impacts of acid rain on the lake trout population at IISD-ELA. He said that “lake trout stopped reproducing not because they were toxified by the acid, but because they were starving to death.” That happened when the tiny organisms on which lake trout depend for their food were dying because of the decline of calcium concentration in lake water.

What this shows is that, on top of the health of lake trout, we now also need to factor in the health of those tiny organisms to fully understand the processes at play. And with more components in play, the dataset becomes more heterogeneous.

What challenges can working with big data pose? How do you think those can be addressed?

Given that we are working with massive amounts of data, the traditional approach to data management and analysis won’t suffice.

While working with the IISD-ELA data, two of the biggest challenges to big data management quickly became clear to me: structuring the data and then archiving it.

I don’t think there is any straightforward answer to structuring a highly complex heterogeneous dataset like our LTER data, especially when we are working with a wide variety of different forms of data, from images and text, to numbers and figures.

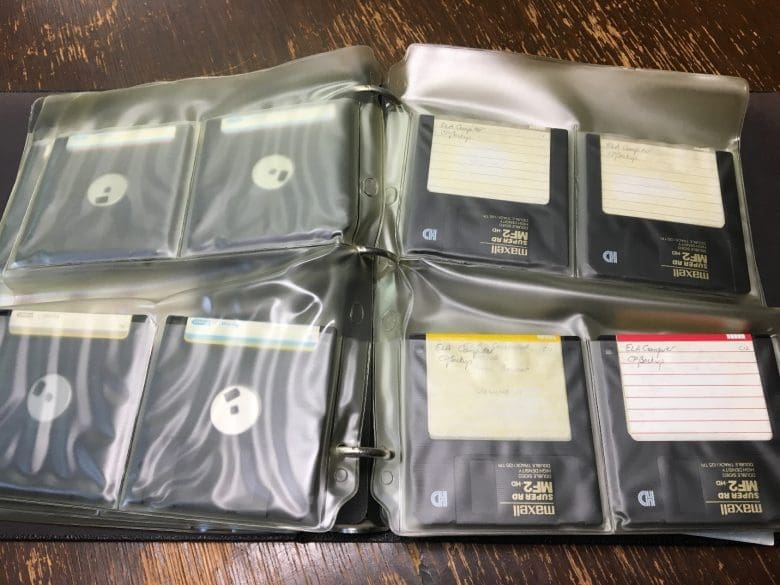

At the moment, in order to bring them to workable and comparable formats, we are adopting common management standards and speaking to other water scientists and community monitoring groups to make sure our data is understandable to anyone who might use it. We are also digitizing data—a lot of it. IISD-ELA has transitioned from printed reports to floppy disks to digital databases. We have recently developed a cloud solution for our master database to protect our data and deliver it to scientists here and around the world.

What’s next for IISD-ELA?

We are working toward making our data FAIR: Findable, Accessible, Interoperable and Reusable. Our LTER data was collected to be used by many people and is available by request, but we estimate this is only a small portion of the total data collected at the site over the past half-century. The task is now to triage our data and make sure the most important and precious datasets are made available to scientists and the general public.

By making sure all data has metadata—a description of how and why the data was collected—we make our dataset more reproducible and robust.

Before we can use data, we need to organize the raw data so it is accurate and useful for what we currently want to find out. The data is reproducible, which means that if another scientist comes along and wants to organize that same raw data using the same method, it will come out the same, or close enough.
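To make the idea concrete, here is a minimal sketch in Python of what "same raw data, same method, same result" could look like in practice. It is an illustration only, not IISD-ELA's actual workflow: the column names, the sample readings, the metadata fields and the helper functions `organize` and `fingerprint` are all hypothetical.

```python
import csv
import hashlib
import io

# Hypothetical raw readings; the column names and values are illustrative only.
RAW_CSV = """lake,date,temp_c
A,2019-07-01,21.4
A,2019-07-02,
B,2019-07-01,19.8
"""

# Hypothetical metadata: a description of how and why the data was collected.
METADATA = {
    "how": "surface temperature measured with a handheld probe",
    "why": "long-term monitoring of lake temperature",
    "method": "drop rows with missing temp_c, sort by lake then date",
}

def organize(raw_text):
    """Drop rows with a missing temperature and sort by lake, then date."""
    rows = [r for r in csv.DictReader(io.StringIO(raw_text)) if r["temp_c"]]
    rows.sort(key=lambda r: (r["lake"], r["date"]))
    return [(r["lake"], r["date"], float(r["temp_c"])) for r in rows]

def fingerprint(rows):
    """Hash the organized rows so that two independent runs can be compared."""
    return hashlib.sha256(repr(rows).encode("utf-8")).hexdigest()

# Two scientists applying the documented method to the same raw data
# should end up with identical organized data, and so identical fingerprints.
first = organize(RAW_CSV)
second = organize(RAW_CSV)
print(METADATA["method"])
print(fingerprint(first) == fingerprint(second))
```

The metadata records the method alongside the data, and the fingerprint gives a quick way to confirm that a second run produced the same organized dataset. For real measurements, "close enough" would probably call for a numeric tolerance rather than an exact hash match.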

There is a belief that most scientific data is facing a reproducibility crisis. Reproducibility is important to enhance data credibility and to ensure that precious data can provide new insight when analyzed further for a bigger or different purpose. I agree; in fact, in my opinion, ecological data is so precious that it shouldn’t be restricted to a single use, especially given how difficult it can be to get it in the first place.

All of this lets us put the data into better context and allows scientists to spend their time doing what they do best: science.
